Emotion Tracking: Mobile Electrodermal Activity (mEDA) and Facial Expressions

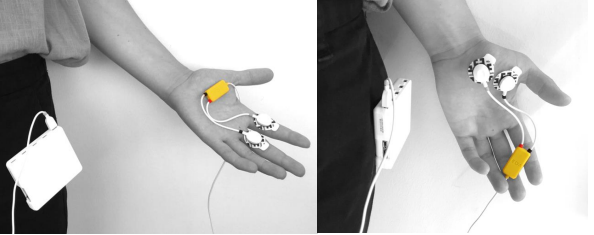

The GIVA research group uses a mobile sensor system (BITalino EDA Sensor) to record galvanic skin responses (GSR) in mobile indoor and outdoor studies, and facial expression recognition software (iMotions Affectiva) in online settings.

The images below show PhD student Sergio Bazzuri.


Technical Specifications of the BITalino Electrodermal Activity (EDA) Sensor

- Arduino-compatible

- Resistance measurement

- 2-lead electrode cable operation

- Gain: 2

- Range: 0-13 µS; 0-25 µS (@ VCC = 3.3 V)

- Bandwidth: 0-2.8 Hz

- Measurement: continuous

- CMRR: 100 dB

- Input impedance: >1 GΩ

- Consumption: ~0.1 mA

- Input voltage range: 1.8-5.5 V

- Electrodes:

  - Placement on the inside of the fingers

  - Gelled self-adhesive disposable Ag/AgCl electrodes

Figure: BITalino EDA Sensor from PLUX Biosignals (https://www.pluxbiosignals.com/products/psychobit?_pos=3&_sid=7a23415f9&_ss=r, accessed 21.07.2025).
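The sensor's raw output is an ADC code, not a conductance value. The sketch below converts raw samples to microsiemens. It assumes the transfer function from PLUX's BITalino EDA datasheet, EDA(µS) = (ADC / 2^n × VCC) / 0.132, with a 10-bit channel (n = 10) and VCC = 3.3 V. Check all three constants against the datasheet revision of your own sensor. With these assumed values, the full-scale reading is 3.3 / 0.132 = 25 µS, which matches the 0-25 µS range listed above. The function name `adc_to_microsiemens` is hypothetical.

```python
"""Convert raw BITalino EDA samples to skin conductance in microsiemens.

Assumption: transfer function from the PLUX BITalino EDA datasheet,
    EDA(uS) = (ADC / 2**n * VCC) / 0.132
with n = 10 bits and VCC = 3.3 V. Verify against your sensor's datasheet.
"""

VCC = 3.3             # supply voltage in volts (assumed)
N_BITS = 10           # ADC resolution of the channel (assumed)
DIVISOR = 0.132       # datasheet constant of the transfer function (assumed)


def adc_to_microsiemens(adc_value: int, n_bits: int = N_BITS, vcc: float = VCC) -> float:
    """Map one raw ADC reading to skin conductance in microsiemens (uS)."""
    max_code = 2 ** n_bits
    if not 0 <= adc_value < max_code:
        raise ValueError(f"ADC value {adc_value} outside 0..{max_code - 1}")
    volts = adc_value / max_code * vcc   # ADC code -> sensor output voltage
    return volts / DIVISOR               # voltage -> conductance in uS


if __name__ == "__main__":
    raw = [0, 256, 512, 1023]
    print([round(adc_to_microsiemens(v), 2) for v in raw])
```

The range check is there because an out-of-range code usually means the wrong channel resolution was passed in, rather than a genuine reading.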

BITalino EDA Sensor Electrodes

BITalino EDA Recording

The BITalino EDA sensor can be connected to AcqKnowledge to record data (see Stationary Electrodermal Activity (EDA)).
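After a recording has been exported as a skin conductance series in µS, a common first analysis is to count skin conductance responses (SCRs). The sketch below applies a simple trough-to-peak rule. It reports a response whenever conductance rises by at least a minimum amplitude above the preceding local minimum. The 0.05 µS default is an assumed criterion and should be set to your study's protocol. This is an illustration only and does not reproduce AcqKnowledge's own SCR detection. The function and parameter names are hypothetical.

```python
from typing import List, Sequence, Tuple

# (onset index, peak index, amplitude in uS)
Response = Tuple[int, int, float]


def detect_scrs(signal: Sequence[float], min_amplitude: float = 0.05) -> List[Response]:
    """Detect skin conductance responses with a trough-to-peak amplitude rule.

    A response is reported at every local maximum whose rise above the most
    recent local minimum is at least `min_amplitude` (uS). The 0.05 uS default
    is an assumed criterion; adjust it to your study protocol.
    """
    responses: List[Response] = []
    trough = 0  # index of the most recent local minimum (candidate onset)
    for i in range(1, len(signal) - 1):
        prev, cur, nxt = signal[i - 1], signal[i], signal[i + 1]
        if cur <= prev and cur < nxt:
            # Local minimum (last sample of a flat bottom): new candidate onset.
            trough = i
        elif cur >= prev and cur > nxt:
            # Local maximum (last sample of a flat top): check the rise.
            amplitude = cur - signal[trough]
            if amplitude >= min_amplitude:
                responses.append((trough, i, amplitude))
    return responses
```

To get onset and peak times in seconds, divide the returned indices by the recording's sampling rate. A peak at the very last sample is not reported, because the sketch cannot confirm that the signal falls afterwards. Real recordings are usually low-pass filtered first, so that noise does not create spurious local extrema.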

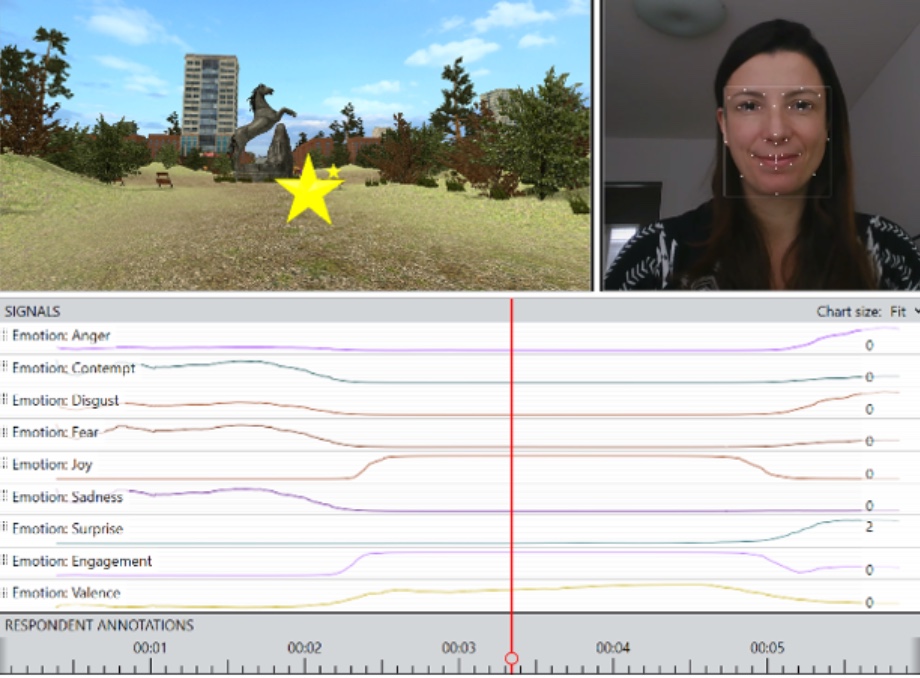

iMotions Affectiva AFFDEX


Affectiva AFFDEX uses automated facial coding metrics to recognize 18 facial expressions, which correspond to Action Units described by the Facial Action Coding System (FACS). The algorithm detects facial expressions that occur at approximately the same time and derives 7 core emotions from these combinations: joy, anger, fear, disgust, contempt, sadness, and surprise. It also summarizes the overall emotional response with two scores: engagement (a participant's expressiveness) and valence (how positive or negative a participant's emotional experience is).
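The sketch below shows how per-frame emotion scores can be reduced to a summary label. It assumes a hypothetical export in which each video frame carries a score from 0 to 100 for each of the seven core emotions. This layout is an assumption, not the actual iMotions export format, and so is the threshold of 50. Each frame is labelled with its strongest emotion, or "neutral" if no score reaches the threshold. The helper names are also hypothetical.

```python
from collections import Counter
from typing import Dict, List

CORE_EMOTIONS = ("joy", "anger", "fear", "disgust", "contempt", "sadness", "surprise")

# Hypothetical per-frame record: emotion name -> score in 0..100 (assumed scale).
Frame = Dict[str, float]


def dominant_emotion(frame: Frame, threshold: float = 50.0) -> str:
    """Return the highest-scoring core emotion in a frame, or 'neutral'.

    Missing emotions count as 0; ties go to the emotion listed first in
    CORE_EMOTIONS, because max() keeps the first maximal element.
    """
    best = max(CORE_EMOTIONS, key=lambda e: frame.get(e, 0.0))
    return best if frame.get(best, 0.0) >= threshold else "neutral"


def emotion_profile(frames: List[Frame], threshold: float = 50.0) -> Dict[str, float]:
    """Share of frames labelled with each emotion (including 'neutral')."""
    if not frames:
        return {}
    counts = Counter(dominant_emotion(f, threshold) for f in frames)
    return {label: n / len(frames) for label, n in counts.items()}
```

A threshold keeps weak, ambiguous frames out of the emotion counts. Lowering it makes the profile more sensitive but also more noisy.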


Affectiva AFFDEX allows you to:

- Gain insights into the consistency of the facial expressions elicited by a sensory stimulus, and into the underlying valence and intensity of the emotional response.

- Collect data in the lab or online through the iMotions Online Data Collection (ODC) tool.

- Get real-time feedback on calibration quality to maximize measurement accuracy.

- Visualize raw and aggregated data in real time and after data collection.

- Get a statistical report of the data, such as the number of participants who showed a given emotional response (e.g., joy or sadness) within a certain time window.

- Synchronize and manage multiple data streams from any biometric sensor, such as eye tracking or EEG, in combination with facial expression data.
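The "number of participants who showed a given emotional response in a certain time window" statistic comes down to a simple count. The sketch below assumes hypothetical per-participant time series for one emotion, given as (timestamp in seconds, score from 0 to 100) pairs. A participant counts if any sample in the window [start, end) reaches the threshold. The data layout, the threshold of 50 and the function name are all assumptions. iMotions computes its report internally.

```python
from typing import Dict, List, Tuple

# Hypothetical sample: (timestamp in seconds, emotion score 0..100).
Sample = Tuple[float, float]


def participants_showing(
    data: Dict[str, List[Sample]],
    start: float,
    end: float,
    threshold: float = 50.0,
) -> List[str]:
    """IDs of participants whose score reaches `threshold` within [start, end)."""
    return sorted(
        pid
        for pid, samples in data.items()
        if any(start <= t < end and score >= threshold for t, score in samples)
    )


joy = {
    "P01": [(0.0, 10.0), (1.0, 70.0)],
    "P02": [(0.5, 40.0), (2.5, 80.0)],
    "P03": [(1.2, 55.0)],
}
hits = participants_showing(joy, start=1.0, end=2.0)
print(len(hits), hits)
```

Because the list of IDs is returned instead of only the count, it is easy to check the report or to cross-reference it with other data streams for the same participants.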

Figure: A screenshot of the iMotions software, showing how the Affectiva algorithm classifies a user's facial expressions in real time while she is navigating through a virtual city online.
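The list above mentions synchronizing facial expression data with streams from other sensors. iMotions does this synchronization itself, so the sketch below only illustrates the general idea and is not iMotions' method. It aligns a hypothetical EDA stream (sorted timestamps in seconds plus µS values) to facial expression frame timestamps. For each frame it takes the most recent EDA sample at or before that frame (a zero-order hold). It assumes both streams share one clock. The function name and stream layout are hypothetical.

```python
from bisect import bisect_right
from typing import List, Optional, Sequence


def align_to_frames(
    eda_times: Sequence[float],
    eda_values: Sequence[float],
    frame_times: Sequence[float],
) -> List[Optional[float]]:
    """For each frame timestamp, return the latest EDA value at or before it.

    Both timestamp sequences must be sorted ascending and share one clock
    (assumed). Frames that precede the first EDA sample get None.
    """
    if len(eda_times) != len(eda_values):
        raise ValueError("eda_times and eda_values must have the same length")
    aligned: List[Optional[float]] = []
    for t in frame_times:
        idx = bisect_right(eda_times, t) - 1  # last EDA sample with time <= t
        aligned.append(eda_values[idx] if idx >= 0 else None)
    return aligned
```

Holding the last value is a reasonable choice because EDA changes slowly compared with typical video frame rates. The sensor's stated bandwidth is 0-2.8 Hz. Linear interpolation between neighbouring samples would be an alternative if smoother alignment is needed.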